Apple AI on Their Own Terms and Edward Snowden Blasts OpenAI's New Hire

A few tidbits of artificial intelligence news from around the world.

Morning y’all!

It’s another week that promises tons of artificial intelligence action (and I’m sounding a bit too high-energy)! I guess I’m just a bit pumped, that’s all.

I hope you have a really good one!

※\(^o^)/※

— Summer

Apple is trying to create the perfect “AI icon” to represent the industry as a whole, and it has some ideas. There isn’t a standard quite yet.

California’s AI bill, SB 1047, would require safety testing for AI models that cost over $100m to train. Will this kill innovation? Probably not. It’s definitely not great for speed, though.

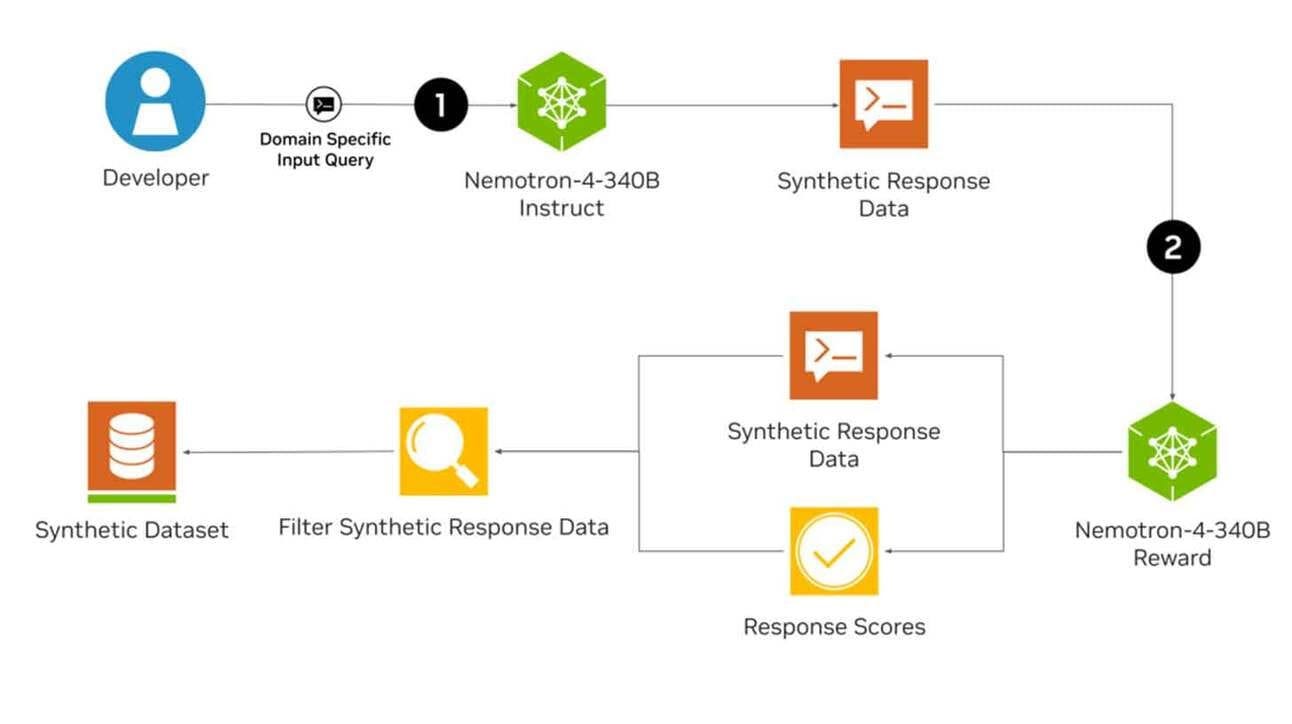

NVIDIA has announced Nemotron-4, a new open language model designed to help developers build large-scale AI apps across industries.

Ex-Robots, a Chinese startup, is developing ultra-realistic humanoid robots that cost upwards of $275k per unit.

Sam Altman says OpenAI could become a for-profit company, which could eventually lead to an IPO or some other large liquidity event for everyone. Everyone over at OpenAI smells money, and I bet it’s going to keep causing issues for folks.

Shanghai AI Lab released a new algorithm called MCT Self-Refine that lets a small model (Llama 3 8B) reach GPT-4-level performance on complex math. Speed and efficiency continue to be the major battleground for many models as people “race towards the bottom” on these types of things.

Fast Company lists six free introductory AI courses on LinkedIn Learning, covering topics such as AI assistants, podcasting, and prompt engineering for Excel and ChatGPT.

Edward Snowden just went hardcore mode on OpenAI, saying that its hiring of former NSA director Paul Nakasone is a “willful, calculated betrayal of the rights of every person on earth.” Ouch. That hurts.

McDonald’s is removing AI-powered drive-thru ordering because it isn’t working as well as the company had hoped. Not every AI rollout is going to work, especially the first time it’s dropped into a human-centric workflow.

Google used AI to analyze over 8,000 YouTube ads on its own platform, and here’s what it found:

The analysis revealed the importance of diverse representation, individual expression, and community-centric narratives in successful ads. Insights also highlighted the use of strong hooks, pop culture references, and catchy music tracks as effective strategies.

It’s not hallucination; it’s bullshit. Especially from ChatGPT:

The researchers argue that ChatGPT and other LLMs clearly spread "soft bullshit". The systems are not designed to produce truthful statements, but rather to produce text that is indistinguishable from human writing. They prioritize persuasiveness over correctness.

Oh well. I mean, even the most capable LLMs can struggle sometimes:

Researchers have used a simple text task to expose serious weaknesses in the reasoning of current language models such as GPT-4, Claude, and LLaMA. The task could be solved by most adults and elementary school children.

Children are more capable than we often believe.

Finally, some long-form thoughts on Apple’s entry into the world of AI:

Indeed, that gets at why I was so impressed by this keynote: Apple, probably more than any other company, deeply understands its position in the value chains in which it operates, and brings that position to bear to get other companies to serve its interests on its terms; we see it with developers, we see it with carriers, we see it with music labels, and now I think we see it with AI. Apple — assuming it delivers on what it showed with Apple Intelligence — is promising to deliver features only it can deliver, and in the process lock-in its ability to compel partners to invest heavily in features it has no interest in developing but wants to make available to Apple’s users on Apple’s terms.

Of course, it’s on their own terms. Per usual.

Have a great one folks!

※\(^o^)/※

— Summer