AGI in 3 Years, AI + UI / UX, Your Career is Meaningless, and Reddit + OpenAI

A few thoughts, links, and things to chew on this weekend.

Hey y’all!

Early morning and we’re up and at ’em! Glad to have had a great night’s rest, since today is pretty packed. I hope the week has been really good to you and that you’re making strides toward your goals!

Let me know if I can help. Have a great weekend!

※\(^o^)/※

— Summer

Apple is innovating with new features in iOS 18: AI-powered eye tracking, haptics for music (cool!), and vocal shortcuts among others. Stay tuned. Things are heating up here and it’ll appear on your phone shortly.

OpenAI just signed a deal to access real-time content from Reddit’s data API, which will now allow OpenAI to link discussions from the site within ChatGPT. Advertising is a big part of this deal, of course.

An OpenAI cofounder predicted that AGI could arrive in 2 to 3 years. What is that about? Here’s your TL;DR:

John Schulman discusses the future development of artificial intelligence (AI) models, focusing on the challenges and potential progress in achieving human-level AGI. He highlights the importance of understanding a model's limitations and the need for coordination among entities developing AGI to ensure safety.

Schulman also emphasizes the significance of reasoning, developing knowledge through introspection, and active learning in advanced AI systems. Schulman shares his experiences in developing language models at OpenAI, particularly the evolution from instruction-following models to the conversational chat assistant, ChatGPT.

He discusses the potential for significant improvements in language models through post-training and the role of curiosity and a holistic understanding of the research stack in advancing this field. However, he acknowledges that data limitations are a challenge for developing larger language models, and he also discusses the concept of transfer learning.

Schulman expresses optimism about the potential for continued progress in language model research but emphasizes the importance of both empirical experimentation and first-principles thinking. He also discusses the differences between pre-training and post-training in AI, with pre-training focusing on imitating content on the internet and maximizing likelihood, and post-training targeting narrower ranges of behaviors for more complex tasks.

By the end of the year, models are expected to become significantly better, capable of handling more complex tasks like carrying out entire coding projects and learning from longer projects through various training methods. However, there will still be bottlenecks and limitations, including human expertise in areas like taste, dealing with ambiguity, and mundane limitations related to the model's affordances.

Schulman also touches on the need for websites to be designed for AI use, with a focus on text-based representations and good UXs for AIs. He mentions that language models have shown the ability to act within similar affordances as humans and that there have been instances of generalization and transfer learning in post-training.

In conclusion, John Schulman discusses the future development of AI models, focusing on the challenges and potential progress in achieving human-level AGI, the importance of understanding a model's limitations, and the need for coordination among entities developing AGI to ensure safety. He also emphasizes the significance of reasoning, developing knowledge through introspection, and active learning in advanced AI systems.

Ok. Cool. Sounds like fun.

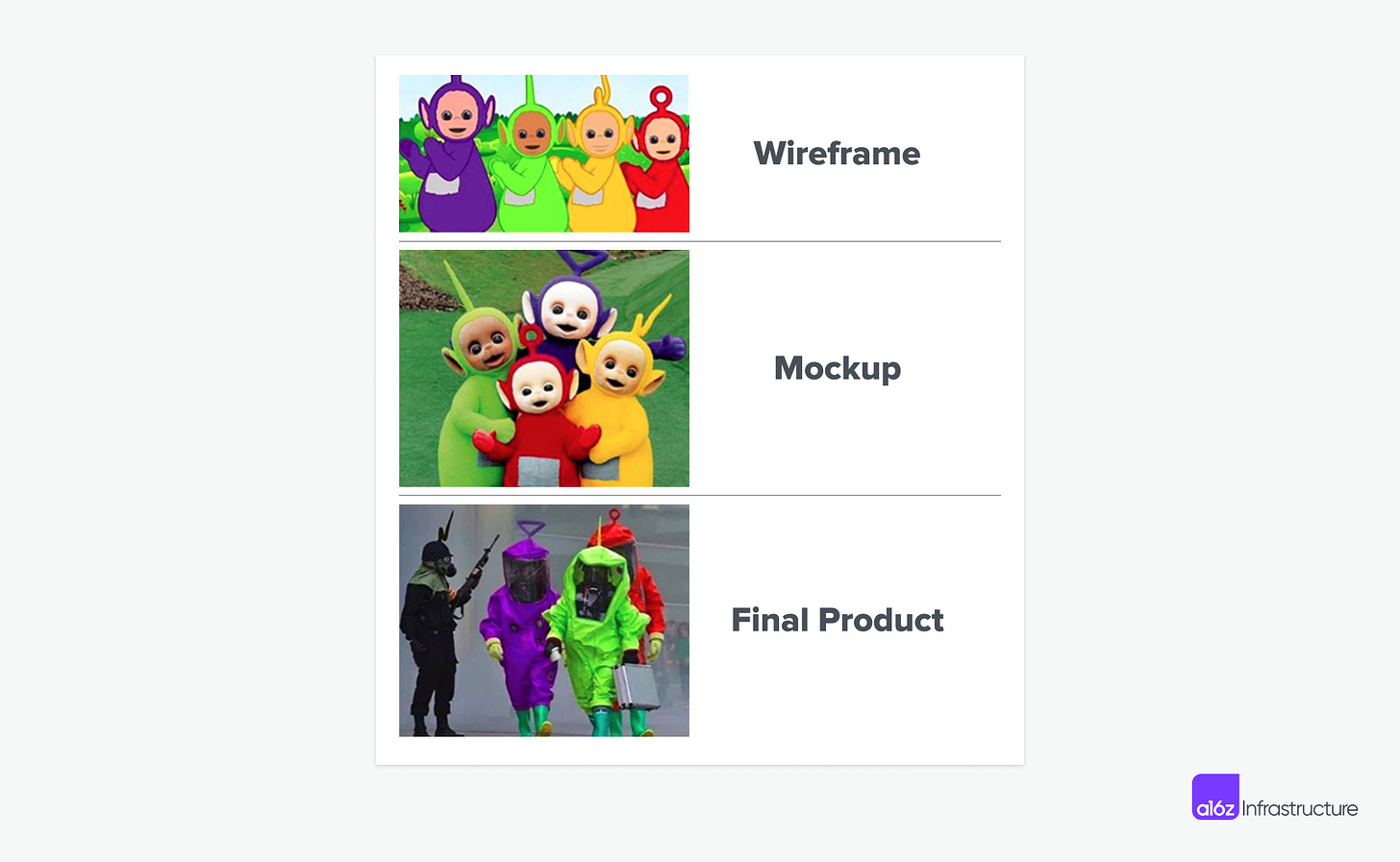

The folks at a16z talk about how AI is remaking or “redesigning” UI and UX in design, especially as it relates to building functional apps and the time required.

This one is funny. Thanks, The Daily Show. You make life better.

Has Anthropic solved “prompt engineering”? You can turn simple descriptions into advanced and more sophisticated prompts optimized for LLMs. Cool.

How do you poach talent from other AI tech companies? Easy:

Oh no. The way you poach people from big tech companies is you tell them that their career is meaningless and that they’re wasting their lives on something that doesn’t matter.

— Palmer Luckey

That’s one way of doing it. 🤣

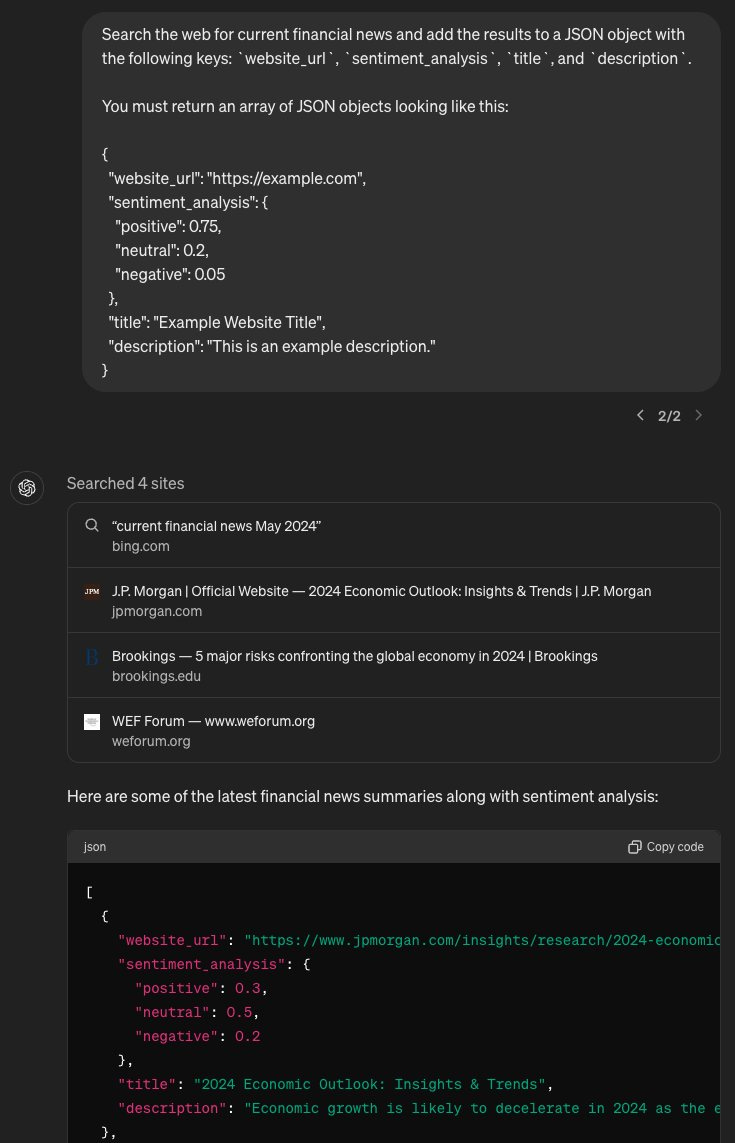

Apparently you can use GPT-4o as a web scraper. That might come in handy.

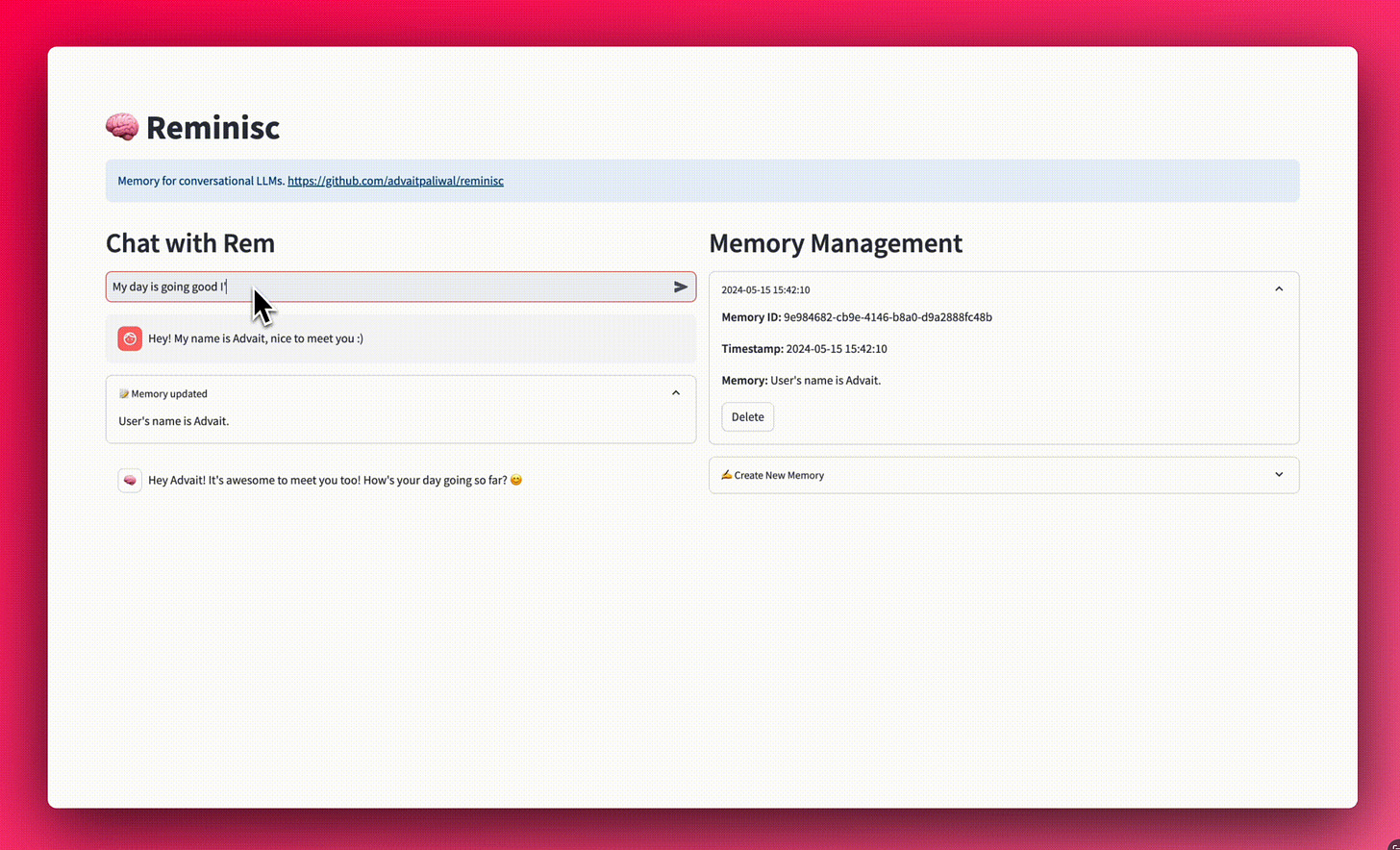

Reminisc is an OpenAI-inspired open-source memory framework for LLMs.

Testing ChatGPT-4o and maths. Give it a read.

And that’s it, friends. Have a great weekend!

※\(^o^)/※

— Summer